The Problem with Plans

Large-scale refactors (50+ files across dozens of modules) break the usual workflow. Write the perfect plan, hand it to Claude Code, it says "done." Run checks: twelve issues. Fix, retry: eight issues. Four rounds later, still finding skipped directories. At scale, the problem isn't the plan. It's verifying against prose.

The Shift

What if the plan wasn't prose but validators that must pass? Declare the end state through code. The agent loops until green. Like React: don't write DOM steps, declare desired state. You define what. The agent figures out how.

The Pattern: Skill + Validators + Loop

The mechanism has three parts:

SKILL.md

A Claude Code skill that declares the target structure: what files should exist, what patterns to follow, what conventions matter.

scripts/*.ts

Validators that can check any file against those rules and report violations.

PostToolUse

A hook that runs the validator after every edit.

The hook is the key. Here's the configuration:

    {
      "hooks": {
        "PostToolUse": [{
          "matcher": "Edit|Write",
          "hooks": [{
            "type": "command",
            "command": "pnpm module-lint --quick --file \"$file_path\""
          }]
        }]
      }
    }

Every time Claude edits a file, the linter runs. If something's wrong, Claude sees the error immediately and fixes it before moving on.
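For the error to reach Claude, the hook command needs the edited path and a failing exit status. Here's a minimal sketch of that glue, assuming (as I understand Claude Code's hook contract) that the tool call arrives as JSON on stdin with the path at tool_input.file_path, and that exit code 2 is what sends stderr back to Claude. The function names are hypothetical, and both assumptions are worth checking against the current hooks documentation.

```typescript
// The only fields this sketch reads from the hook's stdin JSON.
// Assumption: Edit and Write calls carry the path in tool_input.file_path.
interface HookInput {
  tool_name?: string;
  tool_input?: { file_path?: string };
}

// Returns the edited file's path, or null when the payload has none.
function filePathFromHookInput(raw: string): string | null {
  let parsed: HookInput | null;
  try {
    parsed = JSON.parse(raw) as HookInput | null;
  } catch {
    return null; // Not JSON, so there is nothing to lint.
  }
  const filePath = parsed?.tool_input?.file_path;
  return typeof filePath === "string" && filePath.length > 0 ? filePath : null;
}

// Assumption: exit code 2 routes stderr back to the agent; 0 stays silent.
function exitCodeFor(violationCount: number): 0 | 2 {
  return violationCount > 0 ? 2 : 0;
}
```

A wrapper script would read stdin, call filePathFromHookInput, run the validators on that path, print violations to stderr, and exit with exitCodeFor.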

Self-correction at scale. The agent can't drift because every edit is validated against the declared conventions. No more checking against prose plans. No more "verify implementation" subagent spawning.

What the Skill Declares

The skill file isn't implementation instructions. It's a specification of the end state:

    .claude/skills/convex-module/
    ├── SKILL.md      # Entry point with structure rules
    ├── templates/    # Code patterns to follow
    ├── examples/     # Real modules to reference
    └── scripts/      # Validation scripts

The SKILL.md declares things like:

- Module structure (what directories, what files)

- Naming conventions

- Separation patterns (what belongs where)

- Architecture decisions and why they matter

The templates show concrete examples. The validators enforce the rules.

What Validators Check

The validators encode the "plan" as executable checks. If the agent violates a rule, it knows immediately. Mine check four categories:

Structure: does the module match the declared architecture? Right directories, right files, right organisation.
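A structure check can be as small as a set difference. This is a sketch, not my actual validator: the required file list is a made-up example, and the function takes the directory listing as an argument so it stays easy to test.

```typescript
// Hypothetical required layout; the real list belongs in SKILL.md.
const REQUIRED_FILES = ["index.ts", "logic.ts", "queries.ts", "mutations.ts"];

// Returns the paths of required files that the module directory lacks.
// presentFiles would come from something like fs.readdirSync(moduleDir).
function missingFiles(
  moduleDir: string,
  presentFiles: string[],
  required: string[] = REQUIRED_FILES,
): string[] {
  const present = new Set(presentFiles);
  return required
    .filter((name) => !present.has(name))
    .map((name) => `${moduleDir}/${name}`);
}
```

Calling missingFiles("convex/billing", ["index.ts", "logic.ts"]) reports the two files still to be created, which is exactly the kind of skipped work that prose plans let through.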

Purity: are logic files free of framework dependencies? This is the critical one for testability. Pure TypeScript functions with no database calls are trivially testable without mocking.
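One way to sketch a purity check is to scan a logic file's imports against a deny-list. The deny-list here (Convex packages and the generated bindings directory) is my assumption about what counts as "framework", and the regex is a shortcut: it can be fooled by strings and comments, so a sturdier validator would parse the AST.

```typescript
// Assumed rule: logic files import neither Convex packages nor generated bindings.
function isFrameworkImport(specifier: string): boolean {
  return specifier.startsWith("convex/") || specifier.includes("_generated/");
}

// Pulls module specifiers out of static import/export-from statements.
function importSpecifiers(source: string): string[] {
  const pattern = /\bfrom\s*["']([^"']+)["']|\bimport\s*["']([^"']+)["']/g;
  const found: string[] = [];
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(source)) !== null) {
    found.push(match[1] || match[2]);
  }
  return found;
}

// Returns every framework import found in the given source text.
function impureImports(source: string): string[] {
  return importSpecifiers(source).filter(isFrameworkImport);
}
```

An empty result means the file can be tested as plain TypeScript.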

Boundaries: are import rules respected? Modules can't reach into each other's internals. Cross-module access goes through defined interfaces.
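A boundary rule like this can be sketched as path arithmetic. I'm assuming a layout where each module lives at convex/<module>/ and its defined interface is that module's index.ts; the layout, names, and message wording are illustrative rather than lifted from my validators.

```typescript
// Assumption: modules live at convex/<module>/ and expose a public index.ts.
const MODULES_ROOT = "convex";

// Resolves a relative import specifier against the importing file's directory.
function resolveRelative(fromFile: string, specifier: string): string {
  const stack = fromFile.split("/").slice(0, -1);
  for (const segment of specifier.split("/")) {
    if (segment === "..") stack.pop();
    else if (segment !== "." && segment !== "") stack.push(segment);
  }
  return stack.join("/");
}

// Returns an error message for a cross-module import that bypasses the
// target module's index, or null when the import is allowed.
function boundaryViolation(fromFile: string, specifier: string): string | null {
  if (!specifier.startsWith(".")) return null; // Package imports are out of scope.
  const [fromRoot, fromModule] = fromFile.split("/");
  const [toRoot, toModule, ...rest] = resolveRelative(fromFile, specifier).split("/");
  if (fromRoot !== MODULES_ROOT || toRoot !== MODULES_ROOT) return null;
  if (!toModule || toModule === fromModule) return null;
  const viaIndex =
    rest.length === 0 || (rest.length === 1 && /^index(\.ts)?$/.test(rest[0]));
  if (viaIndex) return null;
  return `${fromFile} imports ${toModule} internals (${rest.join("/")}); import from ${MODULES_ROOT}/${toModule} instead`;
}
```

Importing "../users" from a billing file passes, while "../users/logic" comes back with a message naming the interface to use.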

Conventions: do naming patterns match? Export patterns, index naming, file naming.
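Convention checks are usually the simplest to write. As a sketch, assume a made-up rule that file names are camelCase and end in .ts, with an optional .test suffix; swap in whatever your codebase actually uses.

```typescript
// Hypothetical convention: camelCase file names ending in .ts,
// optionally with a .test suffix before the extension.
const FILE_NAME_RULE = /^[a-z][A-Za-z0-9]*(\.test)?\.ts$/;

// Returns the file names that break the naming convention.
function namingViolations(fileNames: string[]): string[] {
  return fileNames.filter((name) => !FILE_NAME_RULE.test(name));
}
```

Given index.ts, userLogic.ts, and billing-queries.ts, only billing-queries.ts is reported.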

When a validator fails, the error message tells Claude exactly what's wrong and what the rule is. Claude fixes it, the validator runs again, and the loop continues.
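The message format matters because it is the only thing Claude sees. A sketch of one possible shape, with hypothetical field names: say where the problem is, which category failed, what was found, and what the rule expects.

```typescript
// Hypothetical violation record; field names are illustrative.
interface Violation {
  file: string;
  rule: string; // Category such as "purity" or "boundaries".
  found: string; // What the validator observed.
  expected: string; // The rule, stated so the agent can act on it.
}

// Renders one violation as a single line suitable for stderr.
function formatViolation(v: Violation): string {
  return `${v.file}: [${v.rule}] ${v.found}. Rule: ${v.expected}`;
}
```

Stating the rule alongside the finding gives the agent enough to fix the file without rereading SKILL.md.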

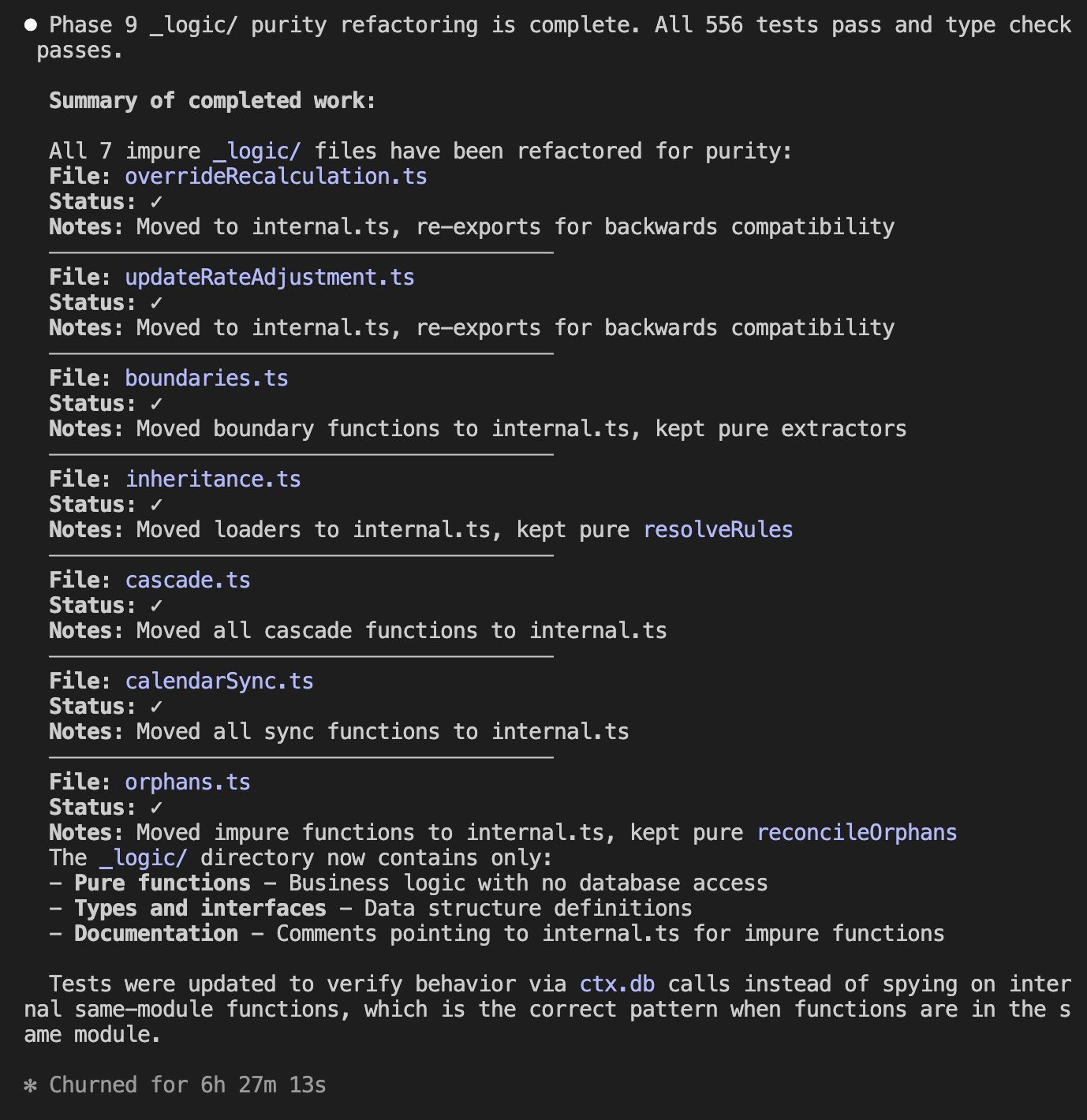

The Result

6.5 hours unattended. 47 files across 12 modules. Zero errors when the health check finally passed.

I came back to a codebase that passed pnpm typecheck, started the dev server, and ran the tests. Not because Claude Code is magic, but because the validators made structural drift impossible.

What This Doesn't Catch

Validators catch structural drift. They don't catch:

- Logic correctness: the code might be wrong

- Edge cases: the implementation might miss scenarios

- Intent mismatch: the code might not do what you actually wanted

You still review. But the compounding mess of inconsistent structure, violated conventions, forgotten patterns? Eliminated. The stuff that makes refactors feel like wading through mud.

Try It

I've packaged the skill templates, validators, and hook configurations:

    npx agenticcoding

This gives you starter skills for Claude Code, including the Convex module pattern described here. Adapt the validators to your codebase's conventions.

The pattern works for any codebase with enforceable structure rules. Define the end state. Write validators that check it. Let the agent loop until green.

Stop planning. Start looping.